Affetto is a latest-generation robot developed by scientists at Osaka University. What makes it special is that it can simulate a wide range of human emotions in the way it reacts to external stimuli, including pain.

Robotics research keeps getting more sophisticated, with Artificial Intelligence reaching new levels of complexity. Recently, neural networks pinpointed eleven potentially dangerous asteroids previously unknown to scientists; you can find out more on this page.

The development of advanced robots able to emulate human feelings has been taken up another notch. Robots built after Affetto will be increasingly reactive to surrounding stimuli and more “human-like” than ever before.

The child robot from Osaka

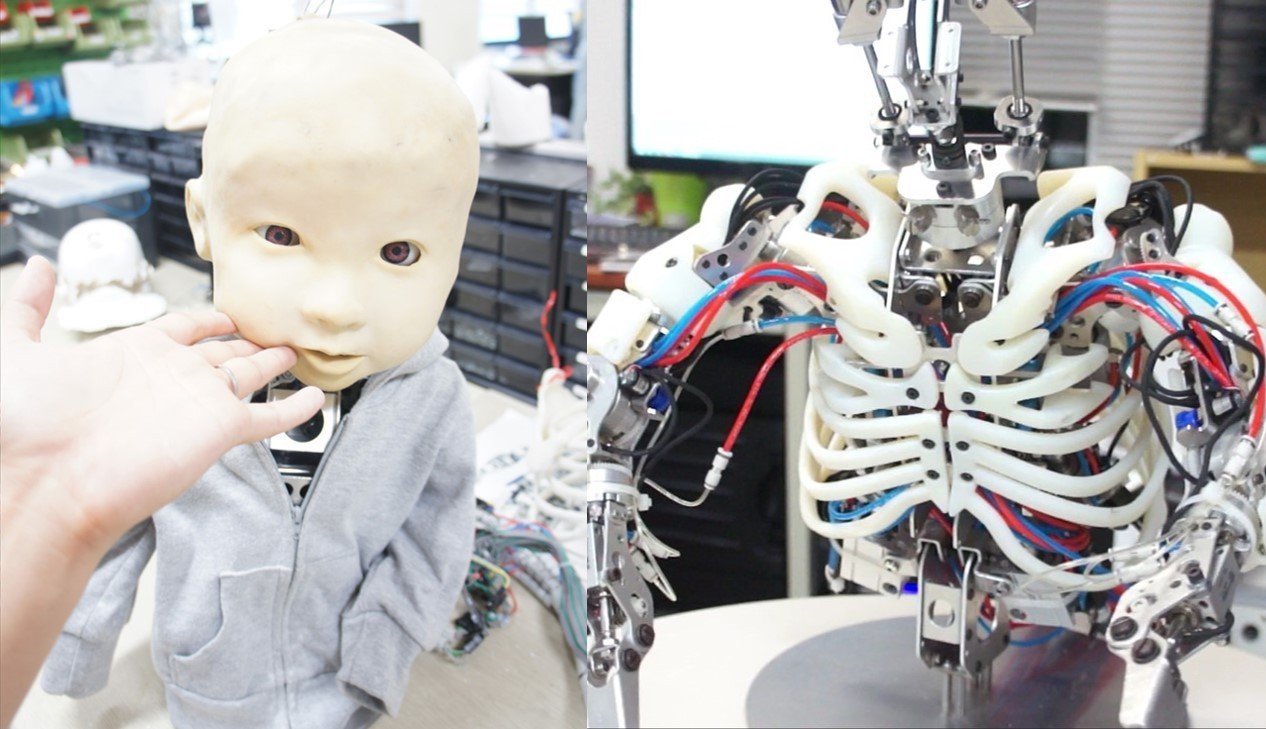

Affetto, the robot child, was created back in 2011 at Osaka University (Japan) by Professor Hisashi Ishihara and his team, including Binyi Wu and Minoru Asada.

When the robot first debuted in the scientific world, its appearance was far more ghastly than it is today. Its face was a crude approximation of that of a toddler aged two or three.

The head was connected to an exoskeleton designed to look roughly like a mechanical human torso.

Initially, Affetto could react to only a limited range of external stimuli. However, the first results were promising enough to prompt further research.

The “child” android was able to interface with its surroundings and process tactile stimulation, both pleasant (a caress) and painful (an electric shock).

Creating human expressions in an android – is it possible?

Affetto was allegedly able to “feel” pain, but the question was how to have it express its feelings outwardly. In the early stages, the robot’s artificial face was far less mobile than it is today, and its range of expressions was very limited.

Mimicking the vast number of micro-movements that a human face is capable of is no easy feat, due to the great number of free nerve endings found in human skin. Robotics engineers, however, were not put off by this arduous challenge.

Scientists thus set out to create artificial skin that would be as close as possible to real human skin, adapting to the smallest movements of the android’s face and allowing it to express feelings and emotions in an intelligible way.

Research in this field is evolving rapidly. Latest-generation robots can recognize and convey a much wider range of emotions than ever before.

Meanwhile, the Artificial Intelligences powering the androids can process external stimuli and situations much as a human brain would.

Affetto can cry out and frown when hurt

The newest version of the android child, Affetto, is noticeably improved compared with the earliest prototypes.

Its face now more closely resembles that of a real child. While the artificial skin still can’t perfectly replicate every human facial expression, it’s far more realistic than its eerily doll-like predecessor.

Affetto can thus express its feelings and emotions much as a human child would.

Upon activation, the robotic child’s default expression is both curious and attentive to its surroundings. For example, Affetto can look around in an attempt to locate the source of a sound.

Likewise, after receiving a powerful electric shock, Affetto winces and opens its mouth in a silent cry of pain.

Owing in no small part to much more fluid eye movement, the robot child’s range of expressions may very well be described as heart-wrenching.

Why are we so sympathetic to Affetto?

When Affetto is seen wincing and crying in pain, few people can remain unaffected. Most viewers feel a powerful urge to comfort the robot child.

Even though Affetto is still a far cry from a replica of a real human child, seeing it sniffle miserably triggers a strong emotional response in human beings.

We are genetically programmed to feel sympathy toward Affetto because of our epimeletic behavior, that is, an instinctive urge to take care of what we perceive as young.

Our sympathy for Affetto is no different from what we’d feel for a puppy or a kitten in distress, as human beings can not only feel empathy but also extend it to other species. Other animals, too, can display strong inter-species protectiveness toward orphaned or ailing young.

However, this is not the case with Artificial Intelligences. AIs are, as of yet, unable to project their feelings beyond the personal plane. The only stimuli and perceptions that an AI may process are tactile or emotional responses that involve its neural network first-hand.

Hence the next inspiring challenge for robotics. The androids of tomorrow will be capable not just of emotive but of empathic feedback as well. Unsurprisingly, Japan was one of the first nations to rise to the challenge, as empathic robots could provide reliable support and companionship to an increasingly elderly population.

Still, if Steven Spielberg’s iconic “A.I. Artificial Intelligence” (a project first developed by Stanley Kubrick) moved you to tears, you may be similarly touched by Affetto, the robot child that feels pain.
